Understand the key differences between AI supercomputing platforms and traditional supercomputers. Explore how they differ in purpose, architecture, and software design, and why learning-driven workloads reshaped high performance computing.

There was a time when supercomputers were the apex of raw computational power. Academics translated physical systems into maths, from equations to complex simulations, and these machines executed those instructions at scale. Access to that power shaped decades of progress in climate science, physics, and engineering.

But AI workloads have now entered the scene, and they need a different model. AI introduced uncertainty, iteration, and data volumes that refused to fit inside equation-first thinking. Instead of calculating answers, machines began to learn behaviours, and it was this shift that forced a structural break.

AI supercomputing exists because classical high performance computing could not stretch far enough to meet the demands of modern machine learning. The two systems now operate side by side, optimised for different definitions of intelligence and performance.

The difference in purpose: Traditional supercomputers vs AI supercomputing platforms

➢ Traditional supercomputers

Traditional supercomputers were built to solve problems that humans already understood conceptually but lacked the capacity to calculate. Scientists describe the system with equations and define the constraints, and the machine evaluates outcomes across massive parameter spaces. These workloads place heavy emphasis on accuracy and numerical stability: if the same input enters the system twice, the same result must emerge, and any deviation signals an error.

This design supports weather forecasting, seismic analysis, molecular simulations, aerospace modelling and much more. The problem definition remains stable. It is only the scale and resolution that increase.

➢ AI supercomputing platforms

AI supercomputers work on a very different philosophy. Engineers do not define every step; they define the goal, typically as an objective to optimise. The system improves by adjusting internal parameters as it processes data, so the process depends more on exposure to data than on explicit instruction. The model refines itself through repetition, probability, and error correction, so results vary during training. Here improvement matters more than consistency.

This approach is well suited to language models, vision systems, recommendation engines, and autonomous decision-making. The system evolves as the data grows.

Purpose alone sets the two platforms apart, and that difference carries through to how they run workloads, what hardware they use, and how they are built and funded.

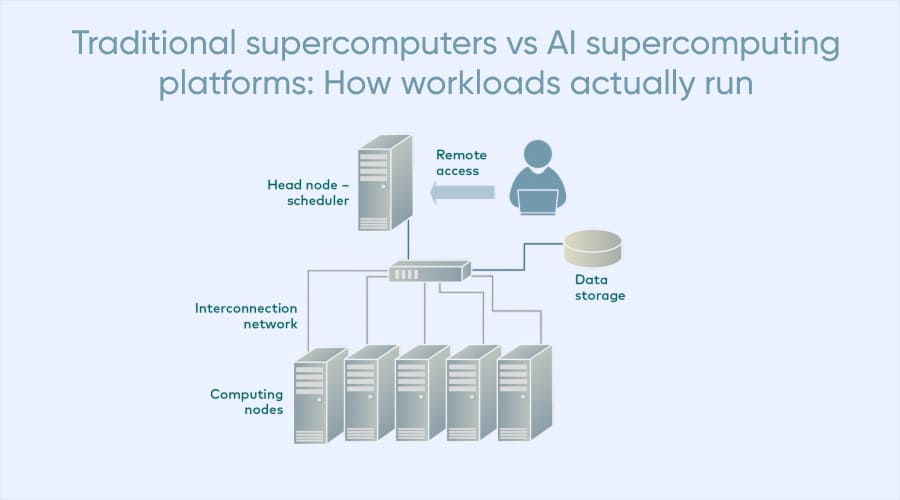

Traditional supercomputers vs AI supercomputing platforms: How workloads actually run

➢ Deterministic execution in traditional supercomputers

Traditional supercomputers run deterministic workloads in which each process follows a defined logic path. Parallelism distributes the workload across multiple processors, but the control flow remains explicit.

The system expects predictable memory access and stable communication patterns. Engineers optimise for throughput without sacrificing precision. This structure fits simulations where one calculation depends directly on the previous one.
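To make the deterministic pattern concrete, here is a minimal Python sketch of an explicit finite-difference heat-diffusion simulation. Each time step reads only the values from the previous step, and running the same input twice produces identical output. The grid, the coefficient `alpha`, and the step count are illustrative assumptions, not taken from any real workload.

```python
# Minimal sketch: explicit finite-difference time stepping for 1D heat diffusion.
# Grid size, diffusion coefficient and step count are illustrative assumptions.


def heat_step(u: list[float], alpha: float) -> list[float]:
    """Advance one time step; every new value is computed from the previous step."""
    new = u[:]  # copying keeps the two end cells fixed (boundary condition)
    for i in range(1, len(u) - 1):
        new[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new


def simulate(u0: list[float], alpha: float, steps: int) -> list[float]:
    u = u0
    for _ in range(steps):  # step n + 1 cannot start until step n is finished
        u = heat_step(u, alpha)
    return u


initial = [0.0] * 5 + [100.0] + [0.0] * 5  # a hot spot in the middle of a rod
# alpha <= 0.5 keeps this explicit scheme numerically stable.
run_a = simulate(initial, alpha=0.25, steps=50)
run_b = simulate(initial, alpha=0.25, steps=50)
print(run_a == run_b)  # same input, same output: True
```

The sequential dependency is the point: step 50 cannot be computed before step 49, so parallelism has to come from splitting the grid, not from running steps out of order.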

➢ Iterative optimisation in AI systems

AI workloads rely on repeated approximation. Each training step measures the error and adjusts parameters slightly, and the system repeats this loop millions or billions of times. The workload tolerates imprecision in intermediate steps. What matters is that the model converges, improving across many iterations rather than being exact at each one.
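The training loop described above can be sketched as mini-batch stochastic gradient descent on a toy linear model. This is a minimal illustration assuming synthetic data from a hypothetical `make_data` helper; real models have billions of parameters rather than two, but the loop has the same shape: estimate the error on a small batch, nudge the parameters, repeat.

```python
import random

# Minimal sketch: mini-batch stochastic gradient descent fitting y = w*x + b.
# The synthetic data, learning rate, batch size and epoch count are illustrative.


def make_data(n: int, rng: random.Random) -> list[tuple[float, float]]:
    """Hypothetical helper: noisy samples from the line y = 3.0*x + 0.5."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 0.5 + rng.gauss(0.0, 0.1)) for x in xs]


def mse(data: list[tuple[float, float]], w: float, b: float) -> float:
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data: list[tuple[float, float]], lr: float = 0.1, batch_size: int = 16,
          epochs: int = 50, seed: int = 0) -> tuple[float, float]:
    rng = random.Random(seed)
    data = list(data)  # shuffle a copy, not the caller's list
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # a different order of examples every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the mean squared error on this batch only: a noisy estimate.
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw  # a small correction, repeated many times
            b -= lr * gb
    return w, b


data = make_data(512, random.Random(42))
w, b = train(data)
print(f"loss before: {mse(data, 0.0, 0.0):.3f}  after: {mse(data, w, b):.3f}")
print(f"w = {w:.2f}, b = {b:.2f}")
```

Changing `seed` changes the shuffle order and lands on slightly different parameters; the run is judged by whether the loss falls, not by reproducing an exact value.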

This structure needs extreme parallelism across simple operations. Control logic matters far less here; data movement and arithmetic throughput dominate performance.

Traditional supercomputers vs AI supercomputing platforms: Hardware philosophy

➢ CPUs define traditional supercomputing power

Traditional supercomputers have historically relied heavily on CPUs. These processors handle complex branching logic, high-precision calculations, and sequential dependencies efficiently. CPU-centric systems support diverse workloads because they adapt to a wide range of problems without specialised tuning.

However, this versatility comes at a cost. CPUs cannot match accelerators when workloads involve massive repetition of simple mathematical operations.

➢ Accelerators define AI supercomputing

AI supercomputing platforms leverage GPUs, TPUs, and other neural processors. These chips execute thousands of identical operations in parallel. Modern machine learning depends heavily on matrix multiplication and tensor operations, and accelerators perform this work efficiently because they trade control complexity for raw throughput.
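Matrix multiplication shows why this workload suits accelerators. In the naive sketch below, written in plain Python purely for illustration, every output cell is an independent dot product built from the same multiply-add, so many cells can be computed simultaneously with no branching between them. The `matmul_flops` helper counts the work: one multiply and one add for each index triple.

```python
# Minimal sketch: a naive matrix multiply written to expose its parallel structure.
# Pure Python for illustration only; real accelerators run tuned kernels.


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    # Every output cell (i, j) is an independent dot product: no cell reads another
    # cell's result, so all m * n cells could be computed at the same time.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_flops(m: int, k: int, n: int) -> int:
    # One multiply and one add for every (i, j, p) index triple.
    return 2 * m * k * n


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(matmul_flops(4096, 4096, 4096))              # 137438953472
```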

AI systems do not treat accelerators as optional components. They form the core of the architecture. CPUs handle the orchestration and the accelerators do the learning.

Traditional supercomputers vs AI supercomputing platforms: Memory requirements

➢ Segmented memory for traditional supercomputers

Traditional supercomputers distribute memory across nodes. Each process accesses its local memory and communicates with others through structured messaging, typically via MPI (the Message Passing Interface). This design works well when data dependencies are predictable, because engineers can plan communication carefully to avoid bottlenecks. The model assumes that computation dominates runtime.
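The distributed-memory pattern can be sketched in a single process. In this minimal illustration (an assumption, not a real MPI program), each simulated rank owns a private slice of a 1D array and learns its neighbours' edge values only through an explicit halo-exchange step before applying a 3-point average. Because the communication is planned, the decomposed result matches the single-array computation exactly.

```python
# Minimal sketch: distributed-memory domain decomposition simulated in one process.
# The "halo exchange" below stands in for messages a real MPI program would send.


def smooth(values: list[float]) -> list[float]:
    """Reference: a 3-point average over the whole array (end cells kept fixed)."""
    out = values[:]
    for i in range(1, len(values) - 1):
        out[i] = (values[i - 1] + values[i] + values[i + 1]) / 3.0
    return out


def smooth_decomposed(values: list[float], ranks: int) -> list[float]:
    if len(values) % ranks:
        raise ValueError("this sketch assumes the array splits evenly across ranks")
    size = len(values) // ranks
    # Each rank's private memory: its own slice and nothing else.
    local = [values[r * size:(r + 1) * size] for r in range(ranks)]

    # Halo exchange: each rank receives one edge value from each neighbour.
    # None marks the outer edge of the whole domain, where there is no neighbour.
    left = [local[r - 1][-1] if r > 0 else None for r in range(ranks)]
    right = [local[r + 1][0] if r < ranks - 1 else None for r in range(ranks)]

    result = []
    for r in range(ranks):
        ext = [left[r]] + local[r] + [right[r]]  # slice padded with ghost cells
        for i in range(1, len(ext) - 1):
            if ext[i - 1] is None or ext[i + 1] is None:
                result.append(ext[i])  # global boundary cell stays fixed
            else:
                result.append((ext[i - 1] + ext[i] + ext[i + 1]) / 3.0)
    return result


values = [float((7 * i) % 5) for i in range(12)]
print(smooth_decomposed(values, ranks=3) == smooth(values))  # True
```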

➢ Bandwidth-centric memory in AI systems

AI workloads such as model training require constant access to shared parameters, so any delay in memory access slows training directly. AI supercomputers respond with high-bandwidth memory (HBM) tightly coupled to accelerators, and many systems reduce or eliminate the separation between compute and memory pools.

Bandwidth replaces latency as the dominant concern. Keeping the accelerators fed with data matters more than minimising individual access times.
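The roofline model is one common way to reason about keeping accelerators fed: attainable throughput is the lesser of peak compute and arithmetic intensity (FLOPs per byte moved) multiplied by memory bandwidth. The sketch below uses hypothetical round numbers for `PEAK_FLOPS` and `BANDWIDTH`, not the specifications of any real accelerator, to show why a low-intensity element-wise operation is limited by bandwidth while a large matrix multiply is limited by compute.

```python
# Minimal sketch of the roofline model. PEAK_FLOPS and BANDWIDTH are hypothetical
# round numbers, not the specifications of any real accelerator.

PEAK_FLOPS = 100e12  # assumed peak arithmetic throughput: 100 TFLOP/s
BANDWIDTH = 2e12     # assumed memory bandwidth: 2 TB/s


def attainable_flops(flops: float, bytes_moved: float) -> float:
    intensity = flops / bytes_moved  # arithmetic intensity: FLOPs per byte moved
    return min(PEAK_FLOPS, intensity * BANDWIDTH)


def limiting_factor(flops: float, bytes_moved: float) -> str:
    ridge = PEAK_FLOPS / BANDWIDTH  # intensity where the two limits meet (50 here)
    return "compute-bound" if flops / bytes_moved >= ridge else "bandwidth-bound"


# Element-wise add of two float32 vectors: 1 FLOP per element, 12 bytes moved
# (two 4-byte reads and one 4-byte write).
n = 10_000_000
print(limiting_factor(n, 12 * n), attainable_flops(n, 12 * n))

# Square float16 matrix multiply: 2*N**3 FLOPs, and at least 3*N**2*2 bytes if each
# matrix crosses the memory bus only once.
N = 4096
print(limiting_factor(2 * N**3, 6 * N**2), attainable_flops(2 * N**3, 6 * N**2))
```

Under these assumed numbers the vector add reaches well under 1% of peak because the chip spends its time waiting for memory, while the matrix multiply can, in principle, run at full arithmetic speed.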

Traditional supercomputers vs AI supercomputing platforms: Data requirements

➢ Structured inputs for traditional supercomputers

Traditional supercomputing thrives on structured data: variables follow defined formats, and inputs conform to known distributions. This predictability allows aggressive optimisation, because engineers know where the data lives and how it flows.

➢ Chaotic inputs for AI supercomputing platforms

AI systems ingest unstructured data at scale. Text, images, audio, and video arrive with inconsistencies and missing context. Before computation begins, the system must tokenise, normalise, encode, and batch this data, and it must do so fast enough to keep the accelerators busy. Preprocessing therefore becomes a critical performance factor.
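A minimal sketch of such a text pipeline in Python, assuming a simple regex tokeniser and a tiny in-memory vocabulary (both hypothetical stand-ins for the subword tokenisers production systems use). It normalises Unicode and whitespace before tokenising, maps tokens to integer IDs with an unknown-token fallback, and pads each batch into a rectangular block.

```python
import re
import unicodedata

# Minimal sketch of a text preprocessing pipeline. The regex tokeniser and tiny
# vocabulary are hypothetical stand-ins for production subword tokenisers.

PAD, UNK = 0, 1


def normalise(text: str) -> str:
    # NFKC folds compatibility characters (e.g. ligatures); then lowercase and
    # collapse runs of whitespace into single spaces.
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def tokenise(text: str) -> list[str]:
    return re.findall(r"\w+|[^\w\s]", text)  # words, or single punctuation marks


def build_vocab(docs: list[list[str]]) -> dict[str, int]:
    vocab = {"<pad>": PAD, "<unk>": UNK}
    for tokens in docs:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    return [vocab.get(tok, UNK) for tok in tokens]  # unseen tokens map to UNK


def batch(seqs: list[list[int]], batch_size: int) -> list[list[list[int]]]:
    out = []
    for start in range(0, len(seqs), batch_size):
        group = seqs[start:start + batch_size]
        width = max(len(s) for s in group)  # pad to the longest sequence in the batch
        out.append([s + [PAD] * (width - len(s)) for s in group])
    return out


raw = ["Hello,   WORLD!", "\ufb01ne-tuning\tmodels", "hello again"]
tokens = [tokenise(normalise(t)) for t in raw]
vocab = build_vocab(tokens[:2])  # treat the third document as unseen text
ids = [encode(t, vocab) for t in tokens]
print(batch(ids, batch_size=2))  # [[[2, 3, 4, 5], [6, 7, 8, 9]], [[2, 1]]]
```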

AI supercomputers treat data pipelines as first-class components, so storage, networking, and preprocessing hardware receive as much attention as compute units.
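One way to treat the pipeline as a first-class component is to overlap data loading with computation so the compute stage rarely waits on storage. This minimal sketch uses a background thread and a bounded `queue.Queue` from the Python standard library; `load_batch` and `train_step` are hypothetical placeholders that only sleep, standing in for I/O and accelerator work.

```python
import queue
import threading
import time

# Minimal sketch: overlap data loading with computation using a bounded queue.
# load_batch and train_step are hypothetical placeholders that just sleep.


def load_batch(i: int) -> list[int]:
    time.sleep(0.01)  # stands in for reading and decoding data from storage
    return [i] * 4


def train_step(batch: list[int]) -> int:
    time.sleep(0.01)  # stands in for accelerator compute
    return sum(batch)


def run(num_batches: int, prefetch: int = 4) -> list[int]:
    q: queue.Queue = queue.Queue(maxsize=prefetch)  # bounded: caps memory use
    done = object()  # sentinel marking the end of the stream

    def producer() -> None:
        for i in range(num_batches):
            q.put(load_batch(i))  # blocks while the queue is full
        q.put(done)

    threading.Thread(target=producer, daemon=True).start()
    results = []
    while (item := q.get()) is not done:
        results.append(train_step(item))  # later batches keep loading meanwhile
    return results


print(run(10))
```

The bounded queue is the design choice that matters: the loader can run a few batches ahead, but it cannot fill memory with unconsumed data. When both stages take similar time, overlapping them brings wall-clock time close to that of the slower stage alone rather than the sum of both.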

Traditional supercomputers vs AI supercomputing platforms: Software ecosystems

➢ Traditional supercomputer software priorities

Classical supercomputing relies on mature programming models, such as MPI and OpenMP, built for stable performance even under the most intense loads. Developers write low-level code, often in Fortran, C, or C++, optimised for performance and predictability.

As a result, software changes are adopted slowly. Researchers value consistency above all else, and breaking changes carry a high cost. This ecosystem rewards careful planning and long execution cycles.

➢ AI frameworks favour speed and flexibility

AI software evolves at a staggering pace as engineers constantly experiment with, modify, and retrain model architectures. Frameworks such as PyTorch, TensorFlow, and JAX abstract hardware details to speed development while integrating deeply with accelerators and memory hierarchies. This pace sets AI software apart from traditional supercomputing environments, and adapting AI frameworks to classical systems introduces friction and performance penalties.

Rather than force compatibility, the industry built parallel ecosystems.

Traditional supercomputers vs AI supercomputing platforms: Energy and cost differences

➢ Fixed cost models in traditional supercomputing

Traditional supercomputers need an enormous upfront investment. Governments and research institutes fund these systems for strategic purposes, and once built, they run nonstop. Facilities must also invest heavily in cooling, power delivery, and maintenance.

It is the long-term scientific output that justifies the expense.

➢ Flexible economics of AI supercomputing

AI supercomputing platforms work very differently. Training large models consumes massive energy over short periods. Organisations measure efficiency per training run. Accelerators deliver much higher performance per watt for parallel workloads.
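Measuring efficiency per training run usually starts with a back-of-the-envelope energy estimate. The sketch below assumes accelerator power dominates (ignoring host CPUs, storage, and networking) and uses a PUE (power usage effectiveness) factor to account for cooling and power-delivery overhead; the accelerator count, wattage, duration, and PUE are hypothetical inputs.

```python
# Minimal sketch: estimate the facility energy of one training run.
# Every number here is a hypothetical input, not a measurement of a real system.


def training_energy_kwh(accelerators: int, watts_each: float, hours: float,
                        pue: float = 1.2) -> float:
    # PUE = total facility power / IT equipment power, so multiplying by it adds
    # the cooling and power-delivery overhead on top of the accelerators' draw.
    it_kw = accelerators * watts_each / 1000.0
    return it_kw * hours * pue


energy = training_energy_kwh(accelerators=512, watts_each=700, hours=240)
print(f"{energy:,.0f} kWh for the run")
```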

Cloud-based AI clusters let organisations scale compute dynamically, paying for usage rather than for infrastructure ownership.
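The pay-for-usage trade-off can be framed as a break-even utilisation: an owned system costs the same every hour whether busy or idle, while rented capacity is billed only when used. This minimal sketch uses hypothetical prices and a simple straight-line cost model that ignores financing, staffing, and resale value.

```python
# Minimal sketch: when does owning hardware beat renting it?
# All prices and durations are hypothetical placeholders.

HOURS_PER_YEAR = 365 * 24


def owned_cost_per_hour(purchase_price: float, lifetime_years: float,
                        yearly_running_cost: float) -> float:
    """Straight-line cost of owning, spread over every calendar hour, busy or idle."""
    total = purchase_price + yearly_running_cost * lifetime_years
    return total / (lifetime_years * HOURS_PER_YEAR)


def break_even_utilisation(cloud_rate_per_hour: float, purchase_price: float,
                           lifetime_years: float, yearly_running_cost: float) -> float:
    """Fraction of hours the hardware must be busy before owning becomes cheaper."""
    owned = owned_cost_per_hour(purchase_price, lifetime_years, yearly_running_cost)
    return owned / cloud_rate_per_hour


u = break_even_utilisation(cloud_rate_per_hour=4.0, purchase_price=30_000,
                           lifetime_years=4, yearly_running_cost=5_000)
print(f"owning pays off above roughly {u:.0%} utilisation")
```

Below that utilisation, renting is cheaper under these assumptions, which is why bursty training schedules tend to favour usage-based pricing.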

Traditional supercomputers vs AI supercomputing platforms: Scalability options

➢ Vertical scaling with traditional supercomputers

Traditional supercomputers are hard to scale. Architects must carefully engineer tightly integrated systems with custom interconnects, and expanding these systems requires long planning cycles and significant investment because the entire system must grow as a single entity.

➢ Horizontal scaling in AI clusters

AI supercomputing favours modular design. Organisations add accelerator nodes as demand increases. Networking still matters, but the architecture tolerates incremental growth, and this flexibility matches the unpredictable growth of AI models.
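Incremental growth of this kind commonly relies on data parallelism: each node holds a copy of the model, processes its own shard of the batch, and the nodes average their gradients, typically with an all-reduce collective. The sketch below simulates that averaging in one process with a one-parameter model, assuming equal-sized shards; it shows that adding workers splits the work without changing the resulting gradient.

```python
# Minimal sketch: data-parallel gradient computation simulated in one process.


def grad_mse(w: float, data: list[tuple[float, float]]) -> float:
    """d/dw of mean((w*x - y)**2) for a one-parameter linear model."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def data_parallel_grad(w: float, data: list[tuple[float, float]], workers: int) -> float:
    if len(data) % workers:
        raise ValueError("this sketch assumes equal-sized shards")
    shard = len(data) // workers
    # Each simulated worker computes a gradient on its own shard only.
    local_grads = [grad_mse(w, data[i * shard:(i + 1) * shard]) for i in range(workers)]
    # Averaging stands in for the all-reduce that real clusters run over the network.
    return sum(local_grads) / workers


data = [(float(x), 2.0 * x + 1.0) for x in range(8)]
full = grad_mse(0.5, data)
for workers in (1, 2, 4, 8):
    print(workers, data_parallel_grad(0.5, data, workers), full)
```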

Why large language models reject classical supercomputers

Large language models expose many of the limits of traditional supercomputers. Training these models requires continuous matrix operations across billions of parameters, a workload that accelerators handle naturally. CPUs alone cannot match that throughput, even at scale.
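A common rule of thumb estimates dense transformer training compute as roughly 6 × parameters × training tokens (about 2 FLOPs per parameter per token for the forward pass and 4 for the backward pass). The sketch below applies it to a hypothetical 7-billion-parameter model trained on one trillion tokens and compares two assumed sustained throughputs, to show how quickly a throughput gap becomes a gap of years.

```python
# Minimal sketch: estimate training compute with the ~6 * N * D rule of thumb for
# dense transformers. Model size, token count and throughputs are hypothetical.

SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    # Roughly 2 FLOPs per parameter per token forward, 4 backward.
    return 6.0 * params * tokens


def days_to_train(total_flops: float, sustained_flops_per_s: float) -> float:
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY


compute = training_flops(params=7e9, tokens=1e12)  # about 4.2e22 FLOPs
for label, rate in [("assumed 1 PFLOP/s sustained", 1e15),
                    ("assumed 1 TFLOP/s sustained", 1e12)]:
    print(f"{label}: {days_to_train(compute, rate):,.0f} days")
```

Under these assumptions the first case takes about 486 days and the second more than a thousand years; the absolute figures depend entirely on the inputs, but the ratio tracks the throughput gap directly.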

At this scale, memory bandwidth becomes the real bottleneck. Accelerators pair computation with memory designed for sustained data flow.

Software adds another barrier. AI frameworks assume accelerator-first environments, and retrofitting them onto classical supercomputers sacrifices much of their efficiency. As a result, organisations choose specialised GPU and TPU clusters for AI training.

Traditional supercomputers still matter

Traditional supercomputers continue to power national research initiatives, and countries are investing heavily in them to advance science and infrastructure planning. AI supercomputing platforms emerged to tackle a very different mission: fuelling commercial intelligence and automation.

The global distribution of supercomputers reflects geopolitical priorities, while AI clusters reflect market demand. Both remain essential. They differ because their goals differ.

Two philosophies of computation

AI supercomputing platforms are not simply an evolution of the traditional supercomputers we already have. Think of them instead as a different interpretation of what machines do.

Traditional systems calculate answers from a given set of rules, while AI systems discover behaviour from data. Both rely on massive parallelism. Beyond that shared foundation, their design principles diverge sharply.