Learn how to build an AI supercomputing platform from scratch with this step-by-step guide to setting up a high-performance system for generative AI and machine learning workloads.

Building an AI supercomputing platform takes extensive planning and technical rigour. Unlike everyday AI applications, these platforms need advanced infrastructure, optimised workflows, and strong security to handle massive datasets and compute-heavy workloads.

So how do you build one? This guide walks through a step-by-step framework for building a high-performance AI platform that can power generative AI, machine learning, and deep learning workloads. Here's everything you need to know to get started.

Analysing the core requirements

Before investing in hardware or software, define the objectives of your AI platform. Identify the AI workloads it is meant to handle, such as training large language models (LLMs), processing computer vision tasks, or running reinforcement learning experiments. These goals drive every later decision. A platform for deep learning training, for example, needs multiple GPUs with very high memory bandwidth, whereas a platform focused on inference workloads may be better served by hybrid CPU-GPU configurations. Understanding the goals first keeps you from investing in the wrong hardware.
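To see how a stated goal turns into hardware numbers, here is a minimal sketch based on the widely cited rule of thumb that training a dense transformer costs roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The model size, token count, per-GPU peak throughput, and utilisation below are illustrative assumptions, not recommendations.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for a dense transformer (~6 FLOPs per parameter per token)."""
    return 6.0 * params * tokens


def gpu_days(params: float, tokens: float, peak_flops_per_gpu: float, utilisation: float) -> float:
    """Convert total training FLOPs into GPU-days at an assumed sustained utilisation."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    sustained_flops = peak_flops_per_gpu * utilisation
    return training_flops(params, tokens) / sustained_flops / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical goal: a 7-billion-parameter model trained on 1 trillion tokens,
    # on GPUs with an assumed 300 TFLOP/s peak running at 50% utilisation.
    compute = training_flops(7e9, 1e12)
    days = gpu_days(7e9, 1e12, 300e12, 0.5)
    print(f"{compute:.2e} FLOPs, about {days:,.0f} GPU-days")
```

Under these assumptions the job needs about 3,241 GPU-days, or roughly 13 days of wall-clock time on 256 GPUs, which is the kind of figure that tells you how large a cluster your goals actually require.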

Next, determine your data strategy. AI models thrive on large, high-quality datasets. Unstructured data such as text, images, and video must be collected, validated, and preprocessed, and you need pipelines that keep the data consistent. Choose storage solutions optimised for your read/write patterns. Enterprise-scale AI platforms typically handle terabytes, and sometimes petabytes, of data, which calls for distributed storage and ultra-high-speed networking.
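As a small illustration of a consistency step in such a pipeline, the sketch below validates, normalises, and exact-deduplicates text records in one streaming pass. The 20-character minimum length and the choice of SHA-256 hashes for duplicate detection are assumptions for the example; catching near-duplicates would need a different technique.

```python
import hashlib
import unicodedata
from typing import Iterable, Iterator


def normalise(text: str) -> str:
    """Canonicalise Unicode and collapse whitespace so trivially different copies match."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.split())


def clean_records(records: Iterable[str], min_chars: int = 20) -> Iterator[str]:
    """Validate, normalise and exact-deduplicate text records in a single streaming pass."""
    seen: set[str] = set()
    for raw in records:
        text = normalise(raw)
        if len(text) < min_chars:  # validation: drop empty or too-short records
            continue
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:  # deduplication: skip exact repeats after normalisation
            continue
        seen.add(digest)
        yield text


if __name__ == "__main__":
    raw = [
        "The quick brown fox jumps over the lazy dog.",
        "The  quick brown fox   jumps over the lazy dog.  ",  # same text, messy spacing
        "too short",
        "A second, different document that is long enough.",
    ]
    for record in clean_records(raw):
        print(record)
```

Because it is a generator that keeps only hashes in memory, the same pattern can sit inside a larger ingestion job without loading the whole corpus at once.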

Choosing the right hardware architecture

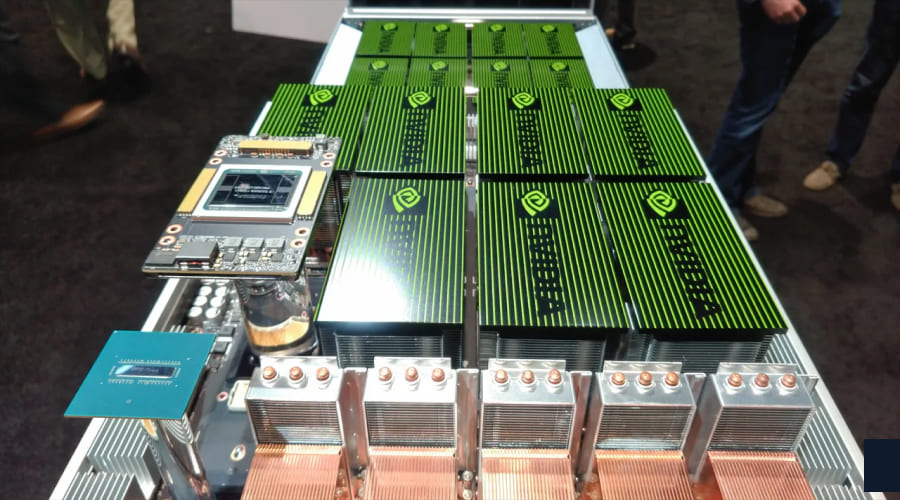

AI supercomputing platforms rely on heterogeneous hardware. At their core, these systems combine high-performance CPUs and GPUs, and some add specialised accelerators such as TPUs or NPUs. GPUs dominate AI training thanks to their massively parallel processing, which suits the matrix operations at the heart of large neural networks. When designing the system, be sure to:

- Cluster GPUs: Interconnect multiple GPUs with high-bandwidth links, such as NVLink within a node and InfiniBand between nodes, to minimise communication latency during model training.

- Ensure memory scalability: Large models can need hundreds of gigabytes of GPU memory (VRAM) in total. Prioritise GPUs with high memory capacity and plan to pool or shard memory across devices.

- Optimise storage: Use NVMe SSDs for high-speed I/O, which is particularly helpful when feeding data to the model during training. Hierarchical storage that combines SSDs and HDDs balances speed and cost.

- Networking: For distributed computing, use low-latency, high-throughput networks so nodes can synchronise computations efficiently.
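To put numbers on the memory point, a common back-of-the-envelope estimate for mixed-precision training with the Adam optimiser is about 16 bytes per parameter: 2 for half-precision weights, 2 for gradients, and 12 for the FP32 master weights and Adam's two moment buffers, before counting activations. The sketch below applies that estimate and assumes the state is evenly sharded across GPUs (ZeRO-3 or FSDP style) with 80% of each GPU's memory usable; both are assumptions, and real activation memory can change the answer considerably.

```python
import math

GIB = 1024 ** 3

# Bytes per parameter for mixed-precision Adam training (activations excluded):
# 2 (half-precision weights) + 2 (half-precision gradients)
# + 4 (FP32 master weights) + 4 (Adam first moment) + 4 (Adam second moment).
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4


def training_state_gib(params: float) -> float:
    """Memory for weights, gradients and optimiser state, in GiB."""
    return params * BYTES_PER_PARAM_TRAINING / GIB


def min_gpus(params: float, gpu_mem_gib: float, headroom: float = 0.8) -> int:
    """Smallest GPU count whose usable memory can hold the evenly sharded training state.

    `headroom` is the assumed fraction of each GPU's memory available for this state;
    the remainder is left for activations and framework overhead.
    """
    usable_gib = gpu_mem_gib * headroom
    return math.ceil(training_state_gib(params) / usable_gib)


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model on GPUs with 80 GiB of memory each.
    print(f"{training_state_gib(70e9):,.0f} GiB of training state")
    print(f"at least {min_gpus(70e9, 80)} GPUs")
```

For a 70-billion-parameter model this gives roughly 1,043 GiB of state and a floor of 17 such GPUs, which is why high-capacity GPUs and memory sharding go hand in hand.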

Finally, consider cooling and power. AI supercomputing platforms generate enormous heat, so liquid cooling combined with redundant power supplies is often necessary to keep the system stable under the most intensive workloads.
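A rough power and heat budget helps size both the cooling and the power supply. The minimal sketch below relies on the fact that almost all electrical power drawn by IT equipment ends up as heat, and on the conversion 1 W ≈ 3.412 BTU/h. The per-GPU wattage, per-node overhead, and 25% spare supply capacity are hypothetical values chosen for illustration.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W of heat is about 3.412 BTU/h


def cluster_power_kw(nodes: int, gpus_per_node: int, gpu_watts: float,
                     node_overhead_watts: float) -> float:
    """Estimated IT load in kW: GPU power plus everything else in each node (CPUs, memory, NICs, fans)."""
    per_node_watts = gpus_per_node * gpu_watts + node_overhead_watts
    return nodes * per_node_watts / 1000.0


def heat_load_btu_per_hour(power_kw: float) -> float:
    """Heat the cooling system must remove, assuming all drawn power becomes heat."""
    return power_kw * 1000.0 * WATTS_TO_BTU_PER_HOUR


def redundant_supply_kw(power_kw: float, spare_fraction: float = 0.25) -> float:
    """Provisioned supply capacity with spare headroom (an assumed policy, not a standard)."""
    return power_kw * (1.0 + spare_fraction)


if __name__ == "__main__":
    # Hypothetical cluster: 32 nodes, 8 GPUs each, assumed 700 W per GPU and 2 kW per-node overhead.
    load = cluster_power_kw(32, 8, 700, 2000)
    print(f"IT load ~{load:.1f} kW, heat ~{heat_load_btu_per_hour(load):,.0f} BTU/h, "
          f"supply ~{redundant_supply_kw(load):.1f} kW")
```

Even this modest hypothetical cluster draws about 243 kW, a heat load that air cooling alone often struggles with.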

Building the software stack

Hardware alone will not deliver the AI performance you need. An AI platform also requires a software stack that manages computation and storage. Here are the basics:

OS and drivers

Linux-based operating systems are the standard choice, dominating almost the entire AI supercomputing space thanks to their stability and flexibility. Install and configure the GPU drivers along with NVIDIA's CUDA or AMD's ROCm platform. These provide libraries of optimised routines for the tensor computations that are essential to neural network training.
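Once drivers are installed, a quick sanity check of the stack can save hours of debugging. The sketch below assumes PyTorch as the framework (it degrades gracefully if PyTorch is absent) and looks for the `nvidia-smi` and `rocm-smi` command-line tools on the `PATH`. On ROCm builds, PyTorch exposes AMD GPUs through the same `torch.cuda` API, and `torch.version.hip` is set instead of `torch.version.cuda`.

```python
import platform
import shutil


def describe_gpu_stack() -> dict:
    """Report OS, vendor tools, and (if installed) PyTorch's view of the GPU runtime."""
    info = {
        "os": platform.system(),
        "nvidia_smi": shutil.which("nvidia-smi") is not None,  # NVIDIA driver tools present?
        "rocm_smi": shutil.which("rocm-smi") is not None,      # AMD ROCm tools present?
        "torch": None,
        "cuda_runtime": None,
        "hip_runtime": None,
        "gpus_visible": 0,
    }
    try:
        import torch  # optional: only used if PyTorch is installed
    except ImportError:
        return info
    info["torch"] = torch.__version__
    info["cuda_runtime"] = torch.version.cuda          # None on CPU-only or ROCm builds
    info["hip_runtime"] = getattr(torch.version, "hip", None)
    if torch.cuda.is_available():                      # True for both CUDA and ROCm builds
        info["gpus_visible"] = torch.cuda.device_count()
    return info


if __name__ == "__main__":
    for key, value in describe_gpu_stack().items():
        print(f"{key:>13}: {value}")
```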

AI frameworks

Next, choose the AI frameworks that best match your workloads:

- TensorFlow or PyTorch for deep learning and neural networks.

- Hugging Face Transformers for LLMs and generative AI.

- JAX for research and highly optimised, compiler-driven pipelines, or ONNX Runtime for optimised inference across frameworks.

Make sure the chosen frameworks work with distributed training tools such as Horovod or PyTorch Distributed, which scale workloads across multiple GPUs and nodes.
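Distributed training tools commonly average gradients with an all-reduce, and Horovod in particular popularised the ring algorithm. The pure-Python simulation below is not how those libraries are implemented; it only illustrates the ring pattern under simplified assumptions (workers as in-memory lists, sends happening in lockstep): a reduce-scatter phase followed by an all-gather phase, with each worker exchanging data only with its neighbour.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce: every worker ends up with the element-wise sum of all buffers."""
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all workers must hold buffers of the same length")
    data = [list(b) for b in buffers]  # copy so the inputs are not mutated
    bounds = [(i * length // n, (i + 1) * length // n) for i in range(n)]  # one chunk per worker

    # Reduce-scatter: at step s, worker r sends chunk (r - s) % n to worker r + 1, which adds it.
    # After n - 1 steps, worker r holds the complete sum of chunk (r + 1) % n.
    for step in range(n - 1):
        sends = [((r - step) % n, data[r][bounds[(r - step) % n][0]:bounds[(r - step) % n][1]])
                 for r in range(n)]  # snapshot first to model simultaneous sends
        for r in range(n):
            chunk, payload = sends[(r - 1) % n]
            lo, _ = bounds[chunk]
            for k, value in enumerate(payload):
                data[r][lo + k] += value

    # All-gather: circulate each completed chunk around the ring, overwriting stale copies.
    for step in range(n - 1):
        sends = [((r + 1 - step) % n, data[r][bounds[(r + 1 - step) % n][0]:bounds[(r + 1 - step) % n][1]])
                 for r in range(n)]
        for r in range(n):
            chunk, payload = sends[(r - 1) % n]
            lo, hi = bounds[chunk]
            data[r][lo:hi] = payload
    return data


if __name__ == "__main__":
    grads = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]  # one "gradient" per worker
    print(ring_allreduce(grads))
```

The appeal of the ring is that each worker sends and receives about 2(n − 1)/n times the buffer size regardless of how many workers join, so bandwidth per worker stays roughly constant as the cluster grows. Dividing the result by n gives the averaged gradient.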

Containerisation and orchestration

Containers simplify deployment and dependency management. Use Docker to package AI workloads and Kubernetes to orchestrate multi-node clusters. Kubernetes provides dynamic scaling and fault tolerance, both essential for large-scale AI experiments.
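As a sketch of how a containerised training run is handed to Kubernetes, the snippet below builds a `batch/v1` Job manifest in Python and prints it as JSON, which `kubectl apply -f -` accepts on standard input. It assumes the NVIDIA device plugin is installed so that GPUs are exposed as the `nvidia.com/gpu` resource; the job name, image, and `train.py` command are hypothetical placeholders.

```python
import json


def gpu_training_job(name: str, image: str, command: list[str], gpus: int = 8) -> dict:
    """Build a Kubernetes batch/v1 Job that requests GPUs through the device-plugin resource name."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 2,  # retry a failed pod up to twice (basic fault tolerance)
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            "command": command,
                            # GPUs are requested as an extended resource limit.
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    job = gpu_training_job(
        "llm-finetune",                               # hypothetical job name
        "registry.example.com/team/trainer:latest",   # hypothetical image
        ["python", "train.py"],                       # hypothetical entry point
    )
    print(json.dumps(job, indent=2))  # e.g. python make_job.py | kubectl apply -f -
```

Generating manifests from code like this makes it easy to template many experiments; teams using Helm or Kustomize would express the same Job in YAML instead.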

Data management and preprocessing

Data is the backbone of AI. Establish a robust data management strategy that covers:

- Data ingestion: Implement ETL pipelines to ingest raw data from multiple sources.

- Preprocessing: Normalise, tokenise, or otherwise transform data to suit the model's requirements.

- Data storage: Use distributed file systems or object storage solutions optimised for throughput.

- Vector databases: For generative AI, store embeddings efficiently to support rapid retrieval for tasks like retrieval augmented generation (RAG).
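To show what a vector database does for RAG, here is a toy in-memory store that ranks documents by cosine similarity to a query embedding. It uses exact brute-force search, whereas production vector databases typically rely on approximate nearest-neighbour indexes; the document IDs and three-dimensional embeddings are hypothetical stand-ins for real model outputs.

```python
import math
from dataclasses import dataclass, field


@dataclass
class InMemoryVectorStore:
    """Toy embedding store with exact cosine-similarity search (no index structures)."""
    ids: list[str] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)

    @staticmethod
    def _unit(v: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0:
            raise ValueError("cannot use a zero vector")
        return [x / norm for x in v]

    def add(self, doc_id: str, embedding: list[float]) -> None:
        # Store unit vectors so a plain dot product equals cosine similarity.
        self.ids.append(doc_id)
        self.vectors.append(self._unit(embedding))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k most similar documents as (id, cosine similarity) pairs."""
        q = self._unit(query)
        scored = [(doc_id, sum(a * b for a, b in zip(q, vec)))
                  for doc_id, vec in zip(self.ids, self.vectors)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("gpu-guide", [0.9, 0.1, 0.0])       # hypothetical embeddings
    store.add("storage-guide", [0.1, 0.9, 0.0])
    store.add("security-guide", [0.0, 0.2, 0.9])
    print(store.search([1.0, 0.0, 0.0], k=2))     # passages to feed the generator
```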

High-quality preprocessing can significantly reduce training time and minimise downstream errors, which in turn tends to improve model accuracy.

Training AI models at scale

Model training consumes most of the resources in an AI supercomputing environment. The following steps help you train models efficiently.

- Foundation model training: Pretrain large models on raw, unlabelled datasets using self-supervised objectives. This step needs high-performance GPUs or TPUs and can run for weeks. To cut costs, consider starting from an open source foundation model instead.

- Fine-tuning: Adapt models to specific applications using labelled datasets. Fine-tuning optimises the model for tasks such as natural language generation or image synthesis.

- Reinforcement learning and evaluation: Use reinforcement learning from human feedback (RLHF) to improve generative AI performance, and regularly evaluate outputs against benchmarks to ensure the model is reliable.

- Distributed training: For massive models, spread computation across multiple GPUs and nodes using frameworks that synchronise gradients and parameters efficiently.
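The core idea of data-parallel training can be shown without any GPUs. In this minimal simulation, assuming a toy linear-regression model and synthetic data (both hypothetical), each "worker" computes a gradient on its own shard, the gradients are averaged (the step an all-reduce performs on real hardware), and every replica applies the same update so the parameters stay in sync.

```python
import random


def make_data(n: int = 400, seed: int = 0) -> list[tuple[float, float]]:
    """Hypothetical synthetic data: y = 3x + 0.5 plus a little noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 3.0 * x + 0.5 + rng.gauss(0.0, 0.01)))
    return data


def local_gradient(w: float, b: float, shard: list[tuple[float, float]]) -> tuple[float, float]:
    """Mean-squared-error gradient for y ≈ w*x + b on one worker's shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def data_parallel_sgd(data: list[tuple[float, float]], workers: int = 4,
                      lr: float = 0.1, steps: int = 200) -> tuple[float, float]:
    # Equal-sized shards make the average of shard means equal the global mean gradient.
    shards = [data[i::workers] for i in range(workers)]
    w, b = 0.0, 0.0  # every replica starts from identical parameters
    for _ in range(steps):
        grads = [local_gradient(w, b, shard) for shard in shards]  # parallel on real hardware
        gw = sum(g[0] for g in grads) / workers  # "all-reduce": average across workers
        gb = sum(g[1] for g in grads) / workers
        w -= lr * gw  # identical update on every replica keeps them synchronised
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    w, b = data_parallel_sgd(make_data())
    print(f"w = {w:.3f}, b = {b:.3f}")
```

Frameworks such as PyTorch Distributed follow the same pattern but overlap gradient communication with the backward pass to hide network latency.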

Training also requires iterative evaluation and hyperparameter tuning. Automating these steps in a workflow pipeline speeds up development.
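Random search is one of the simplest ways to automate hyperparameter tuning. In the sketch below, `validation_loss` is a hypothetical stand-in for "train a model with these settings and report its validation loss"; the search ranges are assumptions, and the learning rate is sampled on a log scale because its useful values span several orders of magnitude.

```python
import math
import random


def validation_loss(lr: float, dropout: float) -> float:
    """Hypothetical stand-in for a full training run; lowest near lr=1e-3, dropout=0.1."""
    return (math.log10(lr) + 3.0) ** 2 + (dropout - 0.1) ** 2


def random_search(trials: int = 50, seed: int = 0) -> tuple[float, dict]:
    """Sample configurations at random and keep the one with the lowest validation loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        params = {
            "lr": 10 ** rng.uniform(-5, -1),   # log-uniform between 1e-5 and 1e-1
            "dropout": rng.uniform(0.0, 0.5),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


if __name__ == "__main__":
    loss, params = random_search()
    print(f"best loss {loss:.4f} with {params}")
```

In a pipeline, each trial would be submitted as its own job; dedicated tools such as Optuna or Ray Tune add smarter search strategies and early stopping on top of this basic loop.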

Implementing security and compliance

Security is critical for AI systems, because vulnerabilities can arise in the model, the codebase, or even the data pipelines. Implement these best practices:

- Limit access to sensitive data and ensure proper encryption.

- Validate generated code from AI systems to prevent vulnerabilities.

- Maintain human oversight in critical decision making loops.

- Monitor supply chain integrity to ensure third party data and software are trustworthy.

- Guard against model hallucinations and biased outputs through continuous auditing.
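One concrete supply-chain control is to pin the SHA-256 digest of every third-party artefact (model weights, datasets, wheels) and refuse to load anything that does not match. The sketch below streams the file so multi-gigabyte weights need not fit in memory; the file name and the idea of a pinned-digest manifest are assumptions for illustration.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large artefacts are never fully loaded into RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise if a downloaded artefact does not match the digest pinned in your manifest."""
    actual = sha256_of(path)
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"checksum mismatch for {path}: expected {expected_sha256}, got {actual}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        weights = Path(tmp) / "model.safetensors"  # hypothetical artefact name
        weights.write_bytes(b"hello")
        pinned = "2cf24dba5fb0a30e26e83b2ac5b9e29e1b161e5c1fa7425e73043362938b9824"
        verify_artifact(weights, pinned)
        print("checksum OK")
```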

Compliance with data privacy regulations is essential whenever an AI application handles personal or sensitive data.

Optimising performance and scalability

AI workloads tend to grow dramatically. Make sure the platform stays efficient as demand increases:

- Use hybrid AI models: Combine multiple architectures to optimise accuracy and speed.

- Leverage automated CI/CD pipelines: This will help deploy updates with minimal downtime.

- Integrate performance monitoring tools: These are essential for finding bottlenecks and allocating resources dynamically.

- Edge deployment: Running inference closer to users can drastically reduce latency for applications that need real-time responses.

- Design for scalability: The platform should adapt to evolving AI models, dataset sizes, and application requirements without costly overhauls.
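As a starting point for GPU monitoring, the sketch below calls `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader,nounits` options and flags under-utilised GPUs. The 50% threshold is an assumption; persistently idle GPUs during training may point to a data-loading or communication bottleneck, though other causes are possible. The parsing is separated from the subprocess call so it can be tested without a GPU.

```python
import subprocess

QUERY = "index,utilization.gpu,memory.used,memory.total"


def read_gpu_stats() -> str:
    """Run nvidia-smi once and return its CSV output (requires an NVIDIA driver on the host)."""
    return subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout


def parse_gpu_stats(csv_text: str) -> list[dict]:
    """Turn lines like '0, 97, 70000, 81920' into dicts (memory values are in MiB)."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, util, used, total = (value.strip() for value in line.split(","))
        rows.append({
            "index": int(index),
            "util_pct": float(util),
            "mem_used_mib": float(used),
            "mem_total_mib": float(total),
        })
    return rows


def underutilised(rows: list[dict], min_util_pct: float = 50.0) -> list[int]:
    """Indices of GPUs whose utilisation is below the (assumed) threshold."""
    return [row["index"] for row in rows if row["util_pct"] < min_util_pct]


if __name__ == "__main__":
    stats = parse_gpu_stats(read_gpu_stats())
    print("under-utilised GPUs:", underutilised(stats))
```

In production you would typically scrape these metrics continuously (for example through an exporter feeding a time-series database) rather than polling by hand.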

Some real world applications of AI supercomputing

Supercomputing platforms power transformative applications across industries:

- Healthcare: Analyse medical images, predict patient risks, and automate hospital workflows.

- Finance: Detect fraud, optimise trading algorithms, and assess financial risk.

- Retail: Deliver personalised recommendations and manage inventory with the help of predictive analytics.

- Manufacturing: Automate robotics, monitor factory floors, and predict equipment failures.

- Military and defence: Process intelligence, automate defence systems, and deploy autonomous vehicles.

Maintaining and evolving the platform

Building the platform is only the first step. Continuous maintenance keeps it reliable and relevant over time.

- Regular updates: Patch the OS and drivers frequently, and keep AI frameworks up to date.

- Model retraining: Update AI models with new data to maintain accuracy.

- Resource scaling: Adjust GPU, CPU, and storage allocations according to your usage patterns.

- Security audits: Regularly check for vulnerabilities and compliance with regulations.
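One simple way to decide when model retraining is due is to track accuracy on freshly labelled production samples and trigger retraining when a rolling average falls below the accuracy measured at deployment. In this sketch the 5-point tolerance and 500-sample window are assumed values; teams often also monitor input-distribution drift, which needs no labels.

```python
from __future__ import annotations

from collections import deque


class RetrainTrigger:
    """Flag retraining when rolling accuracy on fresh labelled data drops below a baseline."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 500):
        self.threshold = baseline_accuracy - tolerance
        self.outcomes: deque[bool] = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def rolling_accuracy(self) -> float | None:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_retrain(self) -> bool:
        # Only judge once the window is full, so a few early errors do not trigger a retrain.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.threshold


if __name__ == "__main__":
    trigger = RetrainTrigger(baseline_accuracy=0.92, window=10)  # small window for the demo
    for correct in [True] * 6 + [False] * 4:
        trigger.record(correct)
    print(trigger.rolling_accuracy(), trigger.should_retrain())
```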

Proactive monitoring will help prevent downtime, improve efficiency, and ensure AI outputs remain accurate.

Closing thoughts

Creating an AI supercomputing platform combines hardware expertise and software engineering, with some data science sprinkled in for good measure. Success depends on selecting the right components and managing data properly so you can train AI models efficiently.

Done right, your platform can help organisations harness the full potential of AI in their workflows. By following this structured approach, teams can build platforms that are scalable, secure, and optimised for high-performance AI workloads.